In the world of accessible communications, Ava understands exactly what it takes to make speech-to-text a life-improving communication technology for Deaf and hard-of-hearing people.

But what about skin-to-text? Are we moving toward a reality where speech becomes optional?

At roughly $2 billion, Apple’s acquisition of the secretive startup Q.ai is its largest deal since Beats, and it signals a future where your phone doesn’t need to hear you to know what you’re saying.

Gulp.

The most telling detail isn’t found in a formal press release. It’s actually hidden in plain sight on the LinkedIn profile of Q.ai’s founder, Aviad Maizels. His experience for Q.ai is a one-liner that reads: “Building something really cool. Can’t talk about it. Literally.” He can’t talk because of Apple’s legendary NDAs, but with Q.ai’s tech, he literally doesn't have to.

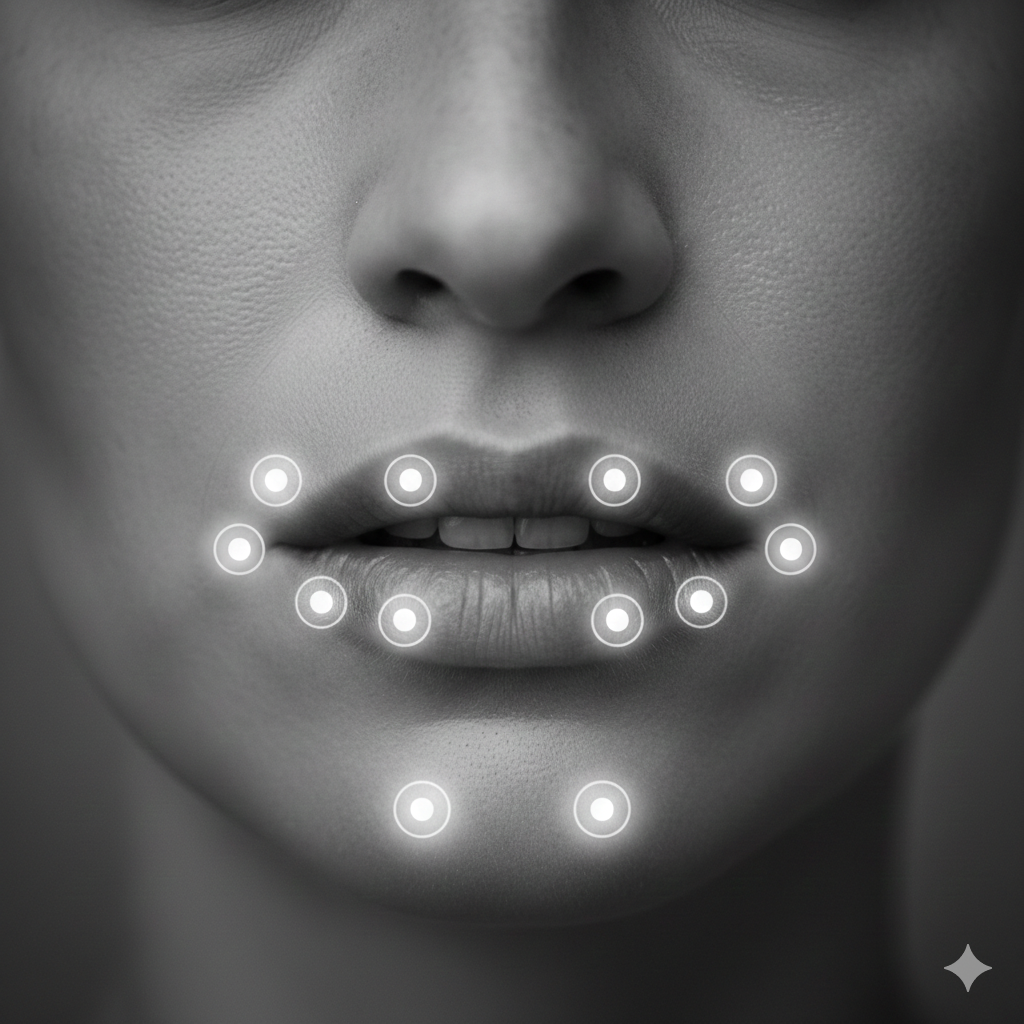

So what exactly is this tech that shall not be named? Q.ai uses imaging and machine learning to detect facial skin micro-movements: the tiny, often imperceptible muscle movements that occur when a person mouths words, prepares to speak without making a sound, or even thinks about speaking. The goal is to capture the physical intent before the word is fully formed.

If you feel creeped out, you aren't alone.

This brings us closer to a Blade Runner reality where your device is less of a tool and more of a facial mind reader, and this time it’s not to ferret out the androids.

The Biometric Whisperer

But for some, this isn't science fiction. It’s yet another AI extension into the physical world. Deaf people who lip-read, or "speech-read," have always had a unique superpower where they can look across a room and understand a conversation.

But that power has limits. It is an exhausting mental marathon involving significant guesswork because many sounds look identical on the lips. To a human eye, "p," "b," and "m" are nearly indistinguishable.

Apple is essentially taking this human superpower and AI-ifying it. Lip-reading AI already exists: LipNet, built by researchers at the University of Oxford and Google DeepMind, is one example. But Q.ai’s technology goes well beyond just interpreting mouth movement; it "reads" the skin.

Scared Yet?

While we don’t know every detail since Apple and Q.ai went hush-hush before and after the acquisition, patents show the tech uses infrared light and high-frame-rate sensors to map the perioral zone around the mouth. By tracking the tension and movement of the skin itself, Apple can translate those micro-shudders into words with a level of accuracy that makes traditional microphones feel primitive.

This is how flat-footed Apple intends to leapfrog Meta and Google in the AI uber race. While competitors are focused on better microphones or large language models in the cloud, Apple is securing a proprietary, "invisible" input layer that lives entirely on your face.

By integrating these sensors into future AirPods, Vision Pro, or the rumored Apple Glasses, Apple is creating an environment where you can have a private, non-verbal conversation with Siri on a crowded subway or at a loud concert. In combination with speech recognition, this could supercharge hearing aids and accessibility devices, allowing them to isolate a single person's audio or intent even in the loudest conditions.

Pocket Lie Detector?

Amazing, but… doesn’t a device that reads your skin raise just a few ethical questions? If your headphones can detect your silent intent, are they also a "lie detector" in your pocket?

Q.ai’s patents suggest the tech can also assess emotional states, heart rate, and respiration. Apple will likely lean on on-device processing and its "Private Cloud Compute" architecture to argue that this biometric data stays private, but aren’t there always hacks? And isn’t reading someone’s lips across a room a hack in its own right?

We are moving toward a form of communication that is more intimate than a whisper and more direct than a text. If Apple executes this intelligently, that future is coming — and it’s feeling almost too advanced.